3D Ray Tracer Engine

Building reliability with testing discipline – a Java ray tracing engine that taught me QA principles through photorealistic rendering

The Story

The Question

"How do you debug a broken reflection?"

You can't just `console.log()` a ray bouncing through a 3D scene. You can't step through what the eye sees. When the mirror looks wrong, when shadows don't fall right, when colors bleed where they shouldn't – the visual output is your only truth.

This is where I learned what Empathy-Driven QA really means.

The Challenge

This wasn't just "build a rendering engine." This was a progressive journey through Software Engineering principles, where each phase added complexity:

- Phase 1: Basic primitives (Point, Vector, Ray)
- Phase 2: TDD with JUnit – testing before seeing
- Phase 3: Ray-geometry intersections
- Phase 4: Camera with Builder pattern
- Phase 5: Lights and rendering pipeline
- Phase 6: Phong lighting model
- Phase 7: Shadows, reflections, transparency
- Phase 8: Anti-aliasing – the breakthrough
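Phase 1's primitives carry the immutability that the rest of an engine like this relies on. The Java sketch below only illustrates the idea and is not the project's code. The class shapes, the `getPoint(t)` method and the rule that a zero vector is rejected at construction are assumptions chosen for illustration.

```java
import java.util.Objects;

/** Immutable point in 3D space. */
final class Point {
    final double x, y, z;

    Point(double x, double y, double z) {
        this.x = x;
        this.y = y;
        this.z = z;
    }

    /** Returns a new point displaced by v; this point is never modified. */
    Point add(Vector v) {
        return new Point(x + v.x, y + v.y, z + v.z);
    }

    /** Vector from other to this point; throws if the points coincide (zero vector). */
    Vector subtract(Point other) {
        return new Vector(x - other.x, y - other.y, z - other.z);
    }
}

/** Immutable, non-zero direction vector (assumption: zero vectors are invalid). */
final class Vector {
    final double x, y, z;

    Vector(double x, double y, double z) {
        if (x == 0 && y == 0 && z == 0)
            throw new IllegalArgumentException("Zero vector has no direction");
        this.x = x;
        this.y = y;
        this.z = z;
    }

    double length() {
        return Math.sqrt(x * x + y * y + z * z);
    }

    /** Scaling by 0 would produce a zero vector, which the constructor rejects. */
    Vector scale(double k) {
        return new Vector(x * k, y * k, z * k);
    }

    Vector normalize() {
        return scale(1 / length());
    }
}

/** Immutable ray whose direction is normalized once, at construction. */
final class Ray {
    final Point origin;
    final Vector direction;

    Ray(Point origin, Vector direction) {
        this.origin = Objects.requireNonNull(origin, "origin must not be null");
        this.direction = Objects.requireNonNull(direction, "direction must not be null").normalize();
    }

    /** Point at distance t along the ray: origin + t * direction (t = 0 returns the origin). */
    Point getPoint(double t) {
        return t == 0 ? origin : origin.add(direction.scale(t));
    }
}
```

No method mutates its receiver, so instances can be shared freely between tests or threads.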

With each phase, the question became harder: How do you know it's right before you see it?

This is the Empathy-Driven QA mindset in action: building reliability from first principles, one verifiable step at a time.

The Turning Point

Then came anti-aliasing. Look at these edges:

The jagged edges on the left aren't just "ugly" – they're friction. They break the illusion. They remind the viewer they're looking at pixels, not reality.

But solving it meant asking: What's the cost?

- No anti-aliasing: 5.8 seconds
- 81 samples per pixel: 232 seconds (40× slower)
- 324 samples per pixel: 928 seconds (160× slower)

This is the exact tradeoff you face in CI/CD pipelines: How much testing is enough? Fast feedback vs. thorough coverage. Developer experience vs. production confidence.

I implemented three sampling strategies (regular grid, random, jittered) and measured everything. The jittered pattern became the default – best visual quality for the performance cost.
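Here is a minimal sketch of the three strategies as interchangeable Strategy objects. It assumes each strategy produces an n × n set of (u, v) offsets inside a unit pixel. The interface and class names are hypothetical, and reading the 81- and 324-sample runs as 9 × 9 and 18 × 18 grids is also an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.function.DoubleBinaryOperator;

/** Strategy: produces n*n sample offsets (u, v) inside a unit pixel. */
interface SamplingPattern {
    List<double[]> offsets(int n, Random rnd);
}

/** Regular grid: one sample at the centre of each of the n*n sub-cells. */
final class GridPattern implements SamplingPattern {
    public List<double[]> offsets(int n, Random rnd) {
        List<double[]> out = new ArrayList<>(n * n);
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                out.add(new double[] {(i + 0.5) / n, (j + 0.5) / n});
        return out;
    }
}

/** Random: n*n uniform samples anywhere in the pixel (clumps and gaps are possible). */
final class RandomPattern implements SamplingPattern {
    public List<double[]> offsets(int n, Random rnd) {
        List<double[]> out = new ArrayList<>(n * n);
        for (int k = 0; k < n * n; k++)
            out.add(new double[] {rnd.nextDouble(), rnd.nextDouble()});
        return out;
    }
}

/** Jittered: one uniformly random sample inside each grid sub-cell. */
final class JitteredPattern implements SamplingPattern {
    public List<double[]> offsets(int n, Random rnd) {
        List<double[]> out = new ArrayList<>(n * n);
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                out.add(new double[] {(i + rnd.nextDouble()) / n, (j + rnd.nextDouble()) / n});
        return out;
    }
}

final class SuperSampler {
    /** Averages a shading function, which maps (u, v) to a value, over one pixel's samples. */
    static double shadePixel(SamplingPattern pattern, int n, Random rnd, DoubleBinaryOperator shade) {
        if (n <= 0) throw new IllegalArgumentException("samples per side must be > 0, got " + n);
        List<double[]> samples = pattern.offsets(n, rnd);
        double sum = 0;
        for (double[] s : samples)
            sum += shade.applyAsDouble(s[0], s[1]);
        return sum / samples.size();
    }
}
```

Jittered sampling keeps the grid's even coverage, with exactly one sample per sub-cell. The random offset inside each cell breaks up the regular structure that a fixed grid can alias against.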

The Architecture

I didn't just write code that works. I wrote code I could trust in 6 months:

- 7 Design Patterns (Builder, Strategy, Composite, Template Method, Null Object, Factory Method, Flyweight)
- 100+ JUnit tests with TDD methodology
- Immutable primitives for thread safety and easier testing
- Defensive programming with validation on every public method
- Builder pattern for developer empathy and robust validation
- Performance profiling built into the test suite
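The following sketch shows one way a Builder with validation could look. `Camera`, its builder methods, the `Vec3` record (Java 16+) and the orthogonality rule for the "to" and "up" vectors are all hypothetical, not the project's API. Each value is checked as it is supplied, so a misconfigured camera fails at construction instead of producing a wrong image.

```java
/** Minimal immutable 3D vector, only for this sketch. */
record Vec3(double x, double y, double z) {
    double dot(Vec3 o) { return x * o.x + y * o.y + z * o.z; }

    double length() { return Math.sqrt(dot(this)); }

    Vec3 normalize() {
        double len = length();
        return new Vec3(x / len, y / len, z / len);
    }

    Vec3 cross(Vec3 o) {
        return new Vec3(y * o.z - z * o.y, z * o.x - x * o.z, x * o.y - y * o.x);
    }
}

/** Immutable camera; instances can only be created through the Builder. */
final class Camera {
    final Vec3 location, vTo, vUp, vRight;
    final double distance, width, height;

    private Camera(Builder b) {
        location = b.location;
        vTo = b.vTo;
        vUp = b.vUp;
        vRight = vTo.cross(vUp); // derived once from the two validated axes
        distance = b.distance;
        width = b.width;
        height = b.height;
    }

    static Builder builder() { return new Builder(); }

    static final class Builder {
        private Vec3 location = new Vec3(0, 0, 0);
        private Vec3 vTo, vUp;
        private double distance, width, height;

        Builder location(Vec3 p) {
            if (p == null) throw new IllegalArgumentException("location must not be null");
            location = p;
            return this;
        }

        Builder direction(Vec3 to, Vec3 up) {
            if (to == null || up == null || to.length() == 0 || up.length() == 0)
                throw new IllegalArgumentException("vTo and vUp must be non-null, non-zero vectors");
            Vec3 t = to.normalize(), u = up.normalize();
            if (Math.abs(t.dot(u)) > 1e-10)
                throw new IllegalArgumentException("vTo and vUp must be orthogonal, dot = " + t.dot(u));
            vTo = t;
            vUp = u;
            return this;
        }

        Builder viewPlaneDistance(double d) {
            if (!(d > 0)) throw new IllegalArgumentException("view plane distance must be > 0, got " + d);
            distance = d;
            return this;
        }

        Builder viewPlaneSize(double w, double h) {
            if (!(w > 0 && h > 0))
                throw new IllegalArgumentException("view plane size must be positive, got " + w + "x" + h);
            width = w;
            height = h;
            return this;
        }

        Camera build() {
            if (vTo == null) throw new IllegalStateException("direction(to, up) was never called");
            if (distance == 0) throw new IllegalStateException("viewPlaneDistance(d) was never called");
            if (width == 0) throw new IllegalStateException("viewPlaneSize(w, h) was never called");
            return new Camera(this);
        }
    }
}
```

Because `build()` refuses incomplete configurations, the finished `Camera` can stay immutable with no "half-initialized" state to test for.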

Every architectural decision asked: "Will the next developer understand this? Will tests catch regressions?"

The Connection to QA

This project taught me the philosophy I bring to QA Automation:

1. You can't test quality in – you build it in from the start
2. Measure everything – performance data informs decisions
3. Fail fast, fail clearly – defensive programming guides users to correct usage
4. Empathy for the end user – anti-aliasing is about viewer comfort, not just "pretty pictures"
5. The best code isn't the cleverest – it's the code you can trust in 6 months

When I write test automation, I apply these same principles: immutable test data, clear failure messages, performance benchmarks, and architecture that makes adding tests easy for the next person.

Key Features

- Multiple 3D geometries: Sphere, Plane, Triangle, Polygon, Cylinder, Tube with precise intersection algorithms

- Multiple light types: Ambient, Directional, Point, and Spot lights with realistic attenuation

- Phong reflection model with diffuse and specular components for photorealistic materials

- Recursive ray tracing for reflections and refractions up to 10 levels deep

- Super-sampling anti-aliasing with three configurable patterns (Grid, Random, Jittered)
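The textbook Phong model for a single point light and one color channel can be sketched as follows. The sign conventions are assumptions: L points from the surface toward the light, and V points toward the viewer. The constant/linear/quadratic attenuation 1 / (kC + kL·d + kQ·d²) and the record names (Java 16+) are also assumptions, not details taken from the project.

```java
/** Material coefficients: ambient kA, diffuse kD, specular kS, shininess exponent. */
record Material(double kA, double kD, double kS, int shininess) {}

/** Point light with constant / linear / quadratic attenuation factors. */
record PointLight(double intensity, double kC, double kL, double kQ) {
    /** Intensity that reaches a surface point at distance d from the light. */
    double at(double d) {
        return intensity / (kC + kL * d + kQ * d * d);
    }
}

final class Phong {
    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /**
     * One colour channel of textbook Phong shading for a single point light.
     * n: unit surface normal; l: unit vector from the point towards the light;
     * v: unit vector from the point towards the viewer; d: distance to the light.
     */
    static double shade(Material m, double ambient, PointLight light, double d,
                        double[] n, double[] l, double[] v) {
        double nl = dot(n, l);
        if (nl <= 0) return m.kA() * ambient; // light is behind the surface: ambient only
        double diffuse = m.kD() * nl;
        // Mirror l about n: r = 2 (n . l) n - l
        double[] r = {2 * nl * n[0] - l[0], 2 * nl * n[1] - l[1], 2 * nl * n[2] - l[2]};
        double specular = m.kS() * Math.pow(Math.max(0, dot(r, v)), m.shininess());
        return m.kA() * ambient + (diffuse + specular) * light.at(d);
    }
}
```

A full renderer would sum the diffuse and specular terms over every light, and would skip a light when a shadow ray toward it is blocked.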

Technical Highlights

- Test-Driven Development with 100+ JUnit tests using equivalence partitioning and boundary value analysis

- 7 Design Patterns applied (Builder, Strategy, Composite, Template Method, Null Object, Factory Method, Flyweight)

- Immutable primitives (Point, Vector, Ray, Color) for thread safety and easier testing

- Performance benchmarking built into the test suite, measuring the 40× and 160× slowdowns of anti-aliasing

- Defensive programming with input validation on every public method and meaningful error messages
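The Composite pattern fits scene geometry naturally: a group of shapes answers the same intersection question as a single shape. In this sketch, `Intersectable`, `Geometries`, `Plane` and the use of raw `double[]` vectors are hypothetical simplifications. Returning an empty list instead of `null` when nothing is hit is one common way to spare callers from null checks.

```java
import java.util.ArrayList;
import java.util.List;

/** Anything a ray can hit: returns ray distances t > 0 of the hits, never null. */
interface Intersectable {
    List<Double> findIntersections(double[] origin, double[] dir);
}

/** Composite: a group of intersectables that is itself intersectable. */
final class Geometries implements Intersectable {
    private final List<Intersectable> children;

    Geometries(Intersectable... items) {
        children = List.of(items); // immutable copy; throws NullPointerException on null elements
    }

    @Override
    public List<Double> findIntersections(double[] origin, double[] dir) {
        List<Double> all = new ArrayList<>();
        for (Intersectable child : children)
            all.addAll(child.findIntersections(origin, dir));
        return all; // empty, not null, when nothing is hit
    }
}

/** Leaf: infinite plane through `point` with normal `normal`. */
final class Plane implements Intersectable {
    private final double[] point, normal;

    Plane(double[] point, double[] normal) {
        this.point = point.clone();   // defensive copies keep the plane immutable
        this.normal = normal.clone();
    }

    private static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    @Override
    public List<Double> findIntersections(double[] origin, double[] dir) {
        double denom = dot(normal, dir);
        if (Math.abs(denom) < 1e-12) return List.of(); // ray parallel to the plane
        double[] toPlane = {point[0] - origin[0], point[1] - origin[1], point[2] - origin[2]};
        double t = dot(normal, toPlane) / denom;
        return t > 0 ? List.of(t) : List.of();         // keep only hits in front of the origin
    }
}
```

Nested groups work unchanged, because a `Geometries` can contain other `Geometries`.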

Challenges & Solutions

Testing Without Visual Feedback

Built a comprehensive JUnit test suite that validates ray-geometry intersections, light calculations, and color mixing before rendering. Used equivalence partitioning and boundary value analysis to catch edge cases. Wrote reference calculations whose expected intersection points could be verified mathematically, enabling true Test-Driven Development for graphics code.
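To show what "verified mathematically" can mean, here is a sketch of a ray-sphere intersection. It is paired with one hand-computed case per equivalence class (the ray crosses the sphere, starts inside it, points away from it, or misses it) plus the tangent boundary. The `Sphere` class, its `intersect` signature and the choice to treat a tangent ray as no hit are assumptions for illustration. A production version would typically also compare against an epsilon instead of exact zero.

```java
import java.util.List;

/** Sphere with ray intersection; returns distances t > 0 along the ray, nearest first. */
final class Sphere {
    private final double[] center;
    private final double radius;

    Sphere(double[] center, double radius) {
        if (!(radius > 0)) throw new IllegalArgumentException("radius must be > 0, got " + radius);
        this.center = center.clone();
        this.radius = radius;
    }

    /** origin: ray start; dir: unit direction vector. */
    List<Double> intersect(double[] origin, double[] dir) {
        double ux = center[0] - origin[0], uy = center[1] - origin[1], uz = center[2] - origin[2];
        double tm = ux * dir[0] + uy * dir[1] + uz * dir[2];  // centre projected onto the ray
        double d2 = ux * ux + uy * uy + uz * uz - tm * tm;    // squared centre-to-ray distance
        double r2 = radius * radius;
        if (d2 >= r2) return List.of();                       // miss, or tangent (boundary)
        double th = Math.sqrt(r2 - d2);                        // half chord length
        double t1 = tm - th, t2 = tm + th;
        if (t1 > 0) return List.of(t1, t2);                    // sphere fully ahead: two hits
        if (t2 > 0) return List.of(t2);                        // origin inside: exit point only
        return List.of();                                      // sphere behind the origin
    }
}
```

Take a unit sphere at the origin and a ray direction of (1, 0, 0). A ray from (-2, 0, 0) hits at t = 1 and t = 3, and a ray from the center hits only at t = 1. A ray from (2, 0, 0) hits nothing. A ray from (-2, 1, 0) grazes the sphere, which is the tangent boundary, and a ray from (-2, 2, 0) misses. Each expected value can be checked by hand before any pixel is drawn.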

Anti-Aliasing Quality vs Performance Tradeoff

Designed three sampling strategies (Grid, Random, Jittered) and measured everything. Built performance profiling into the test suite to track render times. The data showed that Jittered sampling gave the best visual quality for its performance cost, so it became the default. This mirrors CI/CD optimization: measure, compare, choose based on data.
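Below is a minimal timing harness in the spirit of "measure, compare, choose". It is a sketch, not the project's profiling code. `RenderBenchmark` is hypothetical, and so is the idea of passing the renderer as an `IntConsumer` that takes a samples-per-pixel count. A single wall-clock run is noisy, so a real comparison would usually repeat each measurement or use a dedicated benchmarking tool.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.IntConsumer;

final class RenderBenchmark {
    /**
     * Times one call of render per samples-per-pixel value, in milliseconds.
     * render stands in for "render the scene with this many samples per pixel".
     */
    static Map<Integer, Double> time(IntConsumer render, int... samplesPerPixel) {
        Map<Integer, Double> millis = new LinkedHashMap<>();
        for (int spp : samplesPerPixel) {
            long start = System.nanoTime();
            render.accept(spp);
            millis.put(spp, (System.nanoTime() - start) / 1e6);
        }
        return millis;
    }

    /** Each measurement divided by the baseline's, e.g. 40.0 means "40x slower". */
    static Map<Integer, Double> slowdown(Map<Integer, Double> times, int baseline) {
        Double base = times.get(baseline);
        if (base == null || base <= 0)
            throw new IllegalArgumentException("no positive measurement for baseline " + baseline);
        Map<Integer, Double> out = new LinkedHashMap<>();
        times.forEach((spp, t) -> out.put(spp, t / base));
        return out;
    }
}
```

With the timings reported above (5.8 s, 232 s and 928 s for 1, 81 and 324 samples), `slowdown(times, 1)` yields ratios of about 40 and 160.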

Debugging Invisible Errors

When you can't `console.log()` a broken reflection, you need defensive programming. Implemented input validation on every public method, immutable primitives to prevent side effects, and meaningful error messages that guide developers to the source. Result: bugs caught at compile time or in unit tests, not in rendered images.
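Here is a sketch of "validate at every entry point and fail with a message that names the culprit", using an immutable `Color` record (Java 16+). The component rules are assumptions for illustration: values must be finite and non-negative, with no upper clamp. The method names `add`, `scale` and `divide` are also hypothetical.

```java
/** Immutable RGB colour: every operation validates its input and returns a new Color. */
record Color(double r, double g, double b) {
    static final Color BLACK = new Color(0, 0, 0);

    // Compact canonical constructor: bad values are rejected where they enter,
    // with a message that names the offending component and its value.
    Color {
        requireValid("component r", r);
        requireValid("component g", g);
        requireValid("component b", b);
    }

    private static void requireValid(String what, double value) {
        if (!Double.isFinite(value) || value < 0)
            throw new IllegalArgumentException("Color " + what + " must be finite and >= 0, got " + value);
    }

    Color add(Color other) {
        if (other == null) throw new IllegalArgumentException("cannot add a null Color");
        return new Color(r + other.r, g + other.g, b + other.b);
    }

    Color scale(double k) {
        requireValid("scale factor", k);
        return new Color(r * k, g * k, b * k);
    }

    /** Divides every component by n, e.g. to average n anti-aliasing samples. */
    Color divide(int n) {
        if (n <= 0) throw new IllegalArgumentException("divide count must be > 0, got " + n);
        return new Color(r / n, g / n, b / n);
    }
}
```

A negative or NaN value now fails at the line that created it, instead of surfacing later as a black or speckled pixel.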

Impact & Results

For the project:

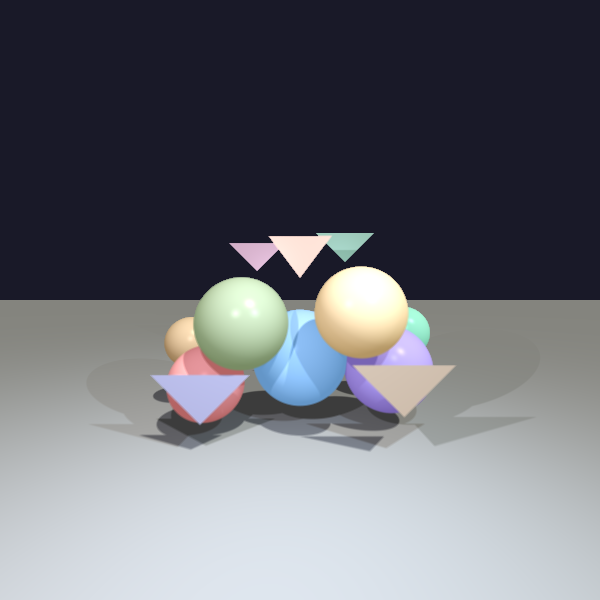

28 photorealistic rendered scenes, from basic spheres to complex piano scenes with realistic lighting, shadows, reflections, and smooth anti-aliased edges.

For my engineering practice:

This project crystallized the principles I bring to QA Automation:

- Build quality in through architecture (7 design patterns)
- Test before you see with TDD (100+ unit tests)
- Measure performance to make informed tradeoffs
- Write code for humans with defensive programming and clear errors
- Empathy-driven decisions balancing quality, performance, and developer experience

For my QA mindset:

> "The best code isn't the cleverest – it's the code you can trust in 6 months."

When shadows fell correctly without debugging, when anti-aliasing worked on the first render, when tests caught regressions before I saw them – that's when I understood: reliability isn't tested in, it's built in from day one.